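3.6 Crawler Program (3): Scraping Tencent Social Recruitment Listings

Goal: scrape the job listings on the Tencent recruitment site (http://hr.tencent.com/position.php) and save them in JSON format.

(Description to be added.)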

Create a new project:

scrapy startproject itzhaopin

1. items.py

from scrapy.item import Item, Field

class TencentItem(Item):
    name = Field()           # job title
    catalog = Field()        # job category
    workLocation = Field()   # work location
    recruitNumber = Field()  # number of openings
    detailLink = Field()     # link to the job detail page
    publishTime = Field()    # publication date

2. pipelines.py

import json
import codecs

class JsonWithEncodingTencentPipeline(object):

    def __init__(self):
        # Open the output file as UTF-8 so Chinese text is written unescaped
        self.file = codecs.open('tencent.json', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        # Write each item as one JSON object per line
        line = json.dumps(dict(item), ensure_ascii=False) + "\n"
        self.file.write(line)
        return item

    def close_spider(self, spider):
        # Scrapy calls close_spider on a pipeline when the spider finishes
        self.file.close()
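The pipeline writes one JSON object per line and passes ensure_ascii=False, so Chinese text is stored as readable UTF-8 instead of \uXXXX escapes. Here is a minimal standalone sketch of that behavior, using only the standard library and written for Python 3. The sample record and the file name sample.json are invented for illustration.

```python
import codecs
import json
import os
import tempfile

# Hypothetical record shaped like one scraped item (values are invented).
record = {"name": ["后台开发工程师"], "workLocation": ["深圳"]}

escaped = json.dumps(record)                       # default: non-ASCII is escaped
readable = json.dumps(record, ensure_ascii=False)  # what the pipeline does

# Write one JSON object per line, then read the file back line by line.
path = os.path.join(tempfile.mkdtemp(), "sample.json")
with codecs.open(path, "w", encoding="utf-8") as f:
    f.write(readable + "\n")

with codecs.open(path, "r", encoding="utf-8") as f:
    restored = [json.loads(line) for line in f]

print(escaped)
print(readable)
```

Both forms decode to the same data; the unescaped form is simply easier to read when the output file is opened in an editor.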

3. settings.py

BOT_NAME = 'itzhaopin'

SPIDER_MODULES = ['itzhaopin.spiders']
NEWSPIDER_MODULE = 'itzhaopin.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'itzhaopin (+http://www.yourdomain.com)'

ITEM_PIPELINES = {
    'itzhaopin.pipelines.JsonWithEncodingTencentPipeline': 300,
}

LOG_LEVEL = 'INFO'
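The number attached to each entry in ITEM_PIPELINES sets the order in which Scrapy passes items through the pipelines: lower values run first. The sketch below only imitates that ordering in plain Python and is not Scrapy's own code. HypotheticalDedupPipeline is an invented name used to make the ordering visible.

```python
# Same shape as ITEM_PIPELINES; the second entry is hypothetical.
ITEM_PIPELINES = {
    'itzhaopin.pipelines.JsonWithEncodingTencentPipeline': 300,
    'itzhaopin.pipelines.HypotheticalDedupPipeline': 100,
}

def pipeline_order(pipelines):
    """Return pipeline paths sorted by their order value, lowest first."""
    return [path for path, order in sorted(pipelines.items(), key=lambda kv: kv[1])]

order = pipeline_order(ITEM_PIPELINES)
for path in order:
    print(path)
```

With these values the hypothetical pipeline (100) would see each item before the JSON writer (300).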

4. tencent_spider.py

# coding: utf-8
import re
import json

from scrapy.selector import Selector
try:
    from scrapy.spider import Spider
except ImportError:
    from scrapy.spider import BaseSpider as Spider
from scrapy.utils.response import get_base_url
from scrapy.utils.url import urljoin_rfc
from scrapy.contrib.spiders import CrawlSpider, Rule
from scrapy.contrib.linkextractors.sgml import SgmlLinkExtractor as sle

from itzhaopin.items import *
from itzhaopin.misc.log import *


class TencentSpider(CrawlSpider):
    name = "tencent"
    allowed_domains = ["tencent.com"]
    start_urls = [
        "http://hr.tencent.com/position.php"
    ]
    rules = [  # rules that decide which URLs are crawled
        Rule(sle(allow=(r"/position.php\?&start=\d{,4}#a",)), follow=True, callback='parse_item')
    ]

    def parse_item(self, response):
        # Extract the data into items, mainly with XPath and CSS selectors
        items = []
        sel = Selector(response)
        base_url = get_base_url(response)

        # Listing rows alternate between the "even" and "odd" classes;
        # both kinds are parsed the same way, even rows first
        for row_class in ('even', 'odd'):
            for site in sel.css('table.tablelist tr.' + row_class):
                item = TencentItem()
                item['name'] = site.css('.l.square a').xpath('text()').extract()
                relative_url = site.css('.l.square a').xpath('@href').extract()[0]
                item['detailLink'] = urljoin_rfc(base_url, relative_url)
                item['catalog'] = site.css('tr > td:nth-child(2)::text').extract()
                item['workLocation'] = site.css('tr > td:nth-child(4)::text').extract()
                item['recruitNumber'] = site.css('tr > td:nth-child(3)::text').extract()
                item['publishTime'] = site.css('tr > td:nth-child(5)::text').extract()
                items.append(item)
                #print repr(item).decode("unicode-escape") + '\n'

        info('parsed ' + str(response))
        return items

    def _process_request(self, request):
        # Logging helper; it is not wired into the rule above
        info('process ' + str(request))
        return request
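Two pieces of the spider can be checked in isolation: the regular expression that decides which pagination links are followed, and the step that turns a relative detail link into an absolute URL. The sketch below uses only the Python 3 standard library (re and urllib.parse.urljoin stand in for Scrapy's link extractor and urljoin_rfc). The candidate URLs and the id value are invented for illustration and are not taken from the real site.

```python
import re
from urllib.parse import urljoin

# Same pattern as the spider's rule, written as a raw string.
PAGE_LINK = re.compile(r"/position.php\?&start=\d{,4}#a")

# Hypothetical URLs for illustration only.
candidates = [
    "http://hr.tencent.com/position.php?&start=10#a",     # pagination link
    "http://hr.tencent.com/position.php?&start=12345#a",  # five digits: too long
    "http://hr.tencent.com/position_detail.php?id=1",     # detail page
]
followed = [url for url in candidates if PAGE_LINK.search(url)]

# Resolve a relative detail link against the listing page URL.
detail_link = urljoin("http://hr.tencent.com/position.php",
                      "position_detail.php?id=1")

print(followed)
print(detail_link)
```

Only the first candidate matches: \d{,4} accepts at most four digits before "#a", and detail pages do not contain "/position.php".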

5. Add a folder named misc under the /itzhaopin/itzhaopin directory:

__init__.py: package initialization file, left empty for now

log.py:

from scrapy import log

def warn(msg):
    log.msg(str(msg), level=log.WARNING)

def info(msg):
    log.msg(str(msg), level=log.INFO)

def debug(msg):
    log.msg(str(msg), level=log.DEBUG)
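Each of the three helpers wraps a log call at a fixed level, and the LOG_LEVEL setting decides which levels are actually printed. The same idea can be sketched with Python's standard logging module in place of scrapy.log, so that it runs without Scrapy installed (Python 3). The logger name and the messages are invented for illustration.

```python
import io
import logging

# Log to an in-memory stream so the result can be inspected afterwards.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s: %(message)s"))

logger = logging.getLogger("itzhaopin.sketch")  # hypothetical logger name
logger.setLevel(logging.INFO)                   # mirrors LOG_LEVEL = 'INFO'
logger.addHandler(handler)
logger.propagate = False                        # keep output out of the root logger

def warn(msg):
    logger.warning(str(msg))

def info(msg):
    logger.info(str(msg))

def debug(msg):
    logger.debug(str(msg))

warn("slow response")
info("parsed page")
debug("raw html length 1234")  # dropped: DEBUG is below the INFO threshold

output = stream.getvalue()
print(output)
```

With the threshold at INFO, the warning and info messages appear and the debug message is filtered out.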

Run the spider:

scrapy crawl tencent

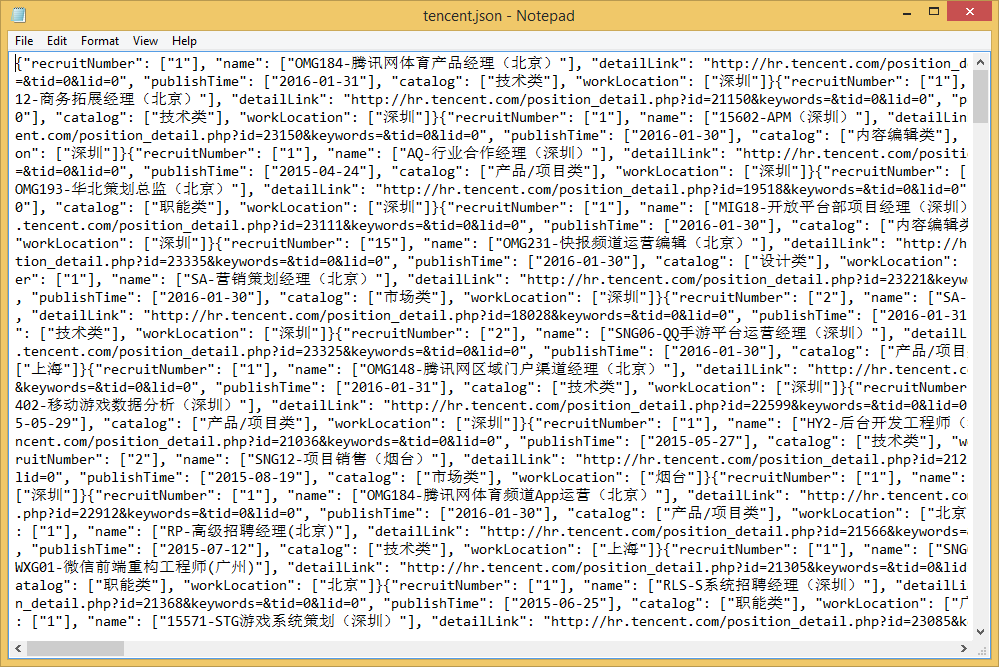

After the run, the JSON output file is generated:

Converting the JSON file to SQL: json2sql.py

# -*- coding: UTF-8 -*-
import codecs
import json

FIELDS = ['name', 'catalog', 'workLocation', 'recruitNumber', 'detailLink', 'publishTime']

def to_sql_text(value):
    # Fields produced by extract() are lists; join them into one string
    if isinstance(value, list):
        value = u''.join(value)
    # Double any single quote so the value stays a valid SQL string literal
    return value.replace(u"'", u"''")

# Read the JSON-lines file written by the pipeline, one item per line
data = []
with codecs.open('itzhaopin/tencent.json', 'r', 'utf-8') as f:
    for line in f:
        data.append(json.loads(line))

# Build one INSERT statement per item
sql = u"\r\n"
for item in data:
    values = u"','".join(to_sql_text(item[field]) for field in FIELDS)
    sql += u"insert into tencent(%s) values ('%s');\r\n" % (','.join(FIELDS), values)

file_object = codecs.open('tencent.sql', 'w', 'utf-8')
file_object.write(sql)
file_object.close()

print("success")
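Building INSERT statements through string formatting is fragile: a single quote inside a job title is enough to produce invalid SQL. One alternative, sketched here under the assumption that the database is reached through a DB-API driver, is to pass the values as query parameters. The sketch uses the standard-library sqlite3 module with an in-memory database (Python 3). The sample row is invented, and the table layout simply mirrors the column list used in json2sql.py.

```python
import json
import sqlite3

FIELDS = ["name", "catalog", "workLocation", "recruitNumber", "detailLink", "publishTime"]

# Invented sample line in the same shape as tencent.json (list-valued fields).
line = json.dumps({
    "name": ["Backend Engineer (O'Reilly team)"],
    "catalog": ["Technology"],
    "workLocation": ["Shenzhen"],
    "recruitNumber": ["2"],
    "detailLink": "http://hr.tencent.com/position_detail.php?id=1",
    "publishTime": ["2015-01-01"],
})

def flatten(value):
    """Join list-valued fields into a single string."""
    return "".join(value) if isinstance(value, list) else value

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tencent (%s)" % ", ".join(FIELDS))

item = json.loads(line)
placeholders = ", ".join("?" for _ in FIELDS)
# Values are passed separately, so quotes in the data need no manual escaping.
conn.execute(
    "INSERT INTO tencent (%s) VALUES (%s)" % (", ".join(FIELDS), placeholders),
    [flatten(item[field]) for field in FIELDS],
)

rows = conn.execute("SELECT name, recruitNumber FROM tencent").fetchall()
print(rows)
```

Only the fixed column names are formatted into the statement text; everything that comes from the scraped data travels as a parameter.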

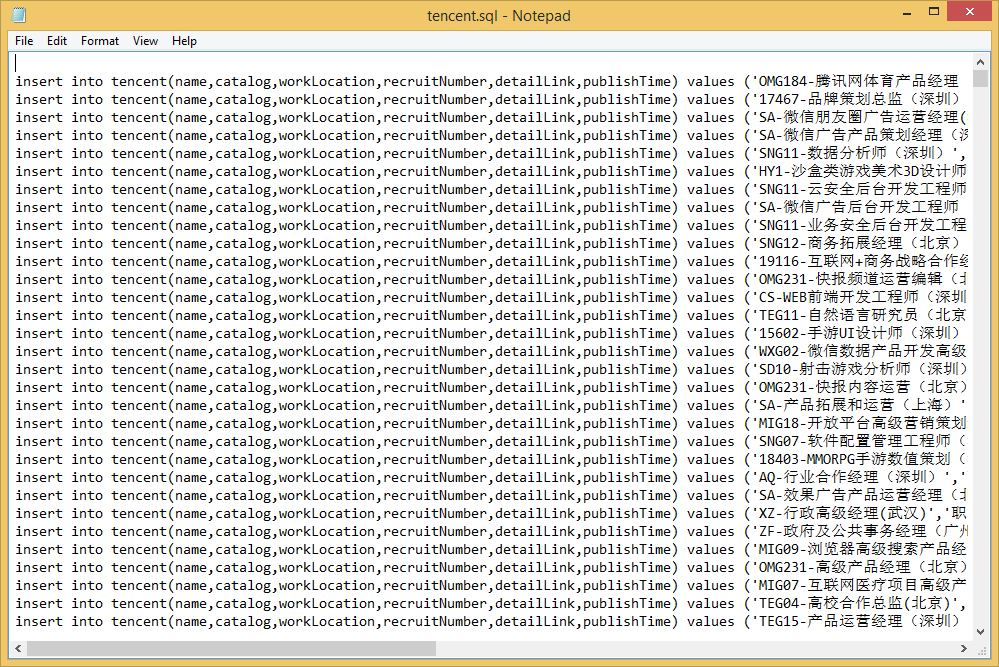

The conversion succeeds and the tencent.sql file is generated:
