3.4 Crawler Program (1): Crawling CSDN Blog Article Titles and Links

Create the project:

scrapy startproject CSDNBlog

1. Write items.py

    # -*- coding:utf-8 -*-

    from scrapy.item import Item, Field


    class CsdnblogItem(Item):
        """Data structure that stores the extracted information."""

        article_name = Field()  # article title
        article_url = Field()   # article URL

This crawler extracts two fields from each article: its name and its URL.

2. Write pipelines.py

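    import json
    import codecs


    class CsdnblogPipeline(object):

        def __init__(self):
            # Open the output file once; codecs encodes to UTF-8 on write.
            self.file = codecs.open('CSDNBlog_data.json', mode='wb', encoding='utf-8')

        def process_item(self, item, spider):
            # Serialize the item as one JSON object per line.
            line = json.dumps(dict(item)) + '\n'
            # Python 2: turn the \uXXXX escapes back into readable characters.
            self.file.write(line.decode("unicode_escape"))
            return item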

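The pipeline creates and opens the JSON file that stores the results. process_item handles each item and writes its data to the output file as one line of JSON.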
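For comparison, the `decode("unicode_escape")` step in the pipeline is specific to Python 2. The following is a minimal sketch, assuming Python 3 and plain dicts in place of Scrapy items, of how the same one-JSON-object-per-line output could be produced with `ensure_ascii=False`. The helper name `write_items`, the sample titles, and the sample URLs are made up for illustration.

```python
import json
import os
import tempfile


def write_items(path, items):
    """Write each item as one JSON object per line, keeping non-ASCII text readable."""
    with open(path, "w", encoding="utf-8") as f:
        for item in items:
            # ensure_ascii=False keeps characters such as Chinese titles
            # as-is instead of emitting \uXXXX escapes.
            f.write(json.dumps(dict(item), ensure_ascii=False) + "\n")


# Hypothetical sample data standing in for scraped items.
sample_items = [
    {"article_name": ["Scrapy 入门"], "article_url": "http://blog.csdn.net/example/1"},
    {"article_name": ["Pipeline 示例"], "article_url": "http://blog.csdn.net/example/2"},
]

out_path = os.path.join(tempfile.mkdtemp(), "CSDNBlog_data.json")
write_items(out_path, sample_items)

# Read the file back to check what was written.
with open(out_path, encoding="utf-8") as f:
    lines = f.read().splitlines()
```

Using a `with` block also closes the file when writing finishes, which the pipeline above leaves to the interpreter.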

3. Write settings.py

    # -*- coding:utf-8 -*-

    BOT_NAME = 'CSDNBlog'

    SPIDER_MODULES = ['CSDNBlog.spiders']
    NEWSPIDER_MODULE = 'CSDNBlog.spiders'

    # (Added) Disable cookies to reduce the chance of being banned
    COOKIES_ENABLED = False

    ITEM_PIPELINES = {
        'CSDNBlog.pipelines.CsdnblogPipeline': 300
    }

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'CSDNBlog (+http://www.yourdomain.com)'

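ITEM_PIPELINES is a dictionary that specifies which pipelines are enabled. Each key is the import path of a pipeline class and each value is its order, conventionally in the range 0-1000; pipelines with lower values run first. If a pipeline is not listed here, the code in pipelines.py will not run.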
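To make the ordering rule concrete, here is a small sketch in plain Python (no Scrapy needed) that sorts a settings-style dictionary by its values, so that lower numbers come first. The second entry, `CSDNBlog.pipelines.DedupPipeline`, is hypothetical and not part of this project, and the sorting is only an illustration, not Scrapy's actual implementation.

```python
# Settings-style mapping: import path of a pipeline class -> order value.
ITEM_PIPELINES = {
    "CSDNBlog.pipelines.CsdnblogPipeline": 300,
    "CSDNBlog.pipelines.DedupPipeline": 100,  # hypothetical, for illustration only
}


def pipeline_run_order(pipelines):
    """Return pipeline paths sorted so that lower order values come first."""
    return [path for path, order in sorted(pipelines.items(), key=lambda kv: kv[1])]


run_order = pipeline_run_order(ITEM_PIPELINES)
for position, path in enumerate(run_order, start=1):
    print(position, path)
```

With these values the hypothetical deduplication step would see each item before the JSON writer does.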

4. Write the spider (the main part). Create CSDNBlog_spider.py in the spiders directory.

    #!/usr/bin/python
    # -*- coding:utf-8 -*-

    # from scrapy.contrib.spiders import CrawlSpider,Rule

    from scrapy.spider import Spider
    from scrapy.http import Request
    from scrapy.selector import Selector

    from CSDNBlog.items import CsdnblogItem


    class CSDNBlogSpider(Spider):
        """The CSDNBlogSpider crawler."""

        name = "CSDNBlog"

        # Slow the crawl down: wait 1 s between requests
        download_delay = 1

        allowed_domains = ["blog.csdn.net"]
        start_urls = [
            # URL of the first article
            "http://blog.csdn.net/u012150179/article/details/11749017"
        ]

        def parse(self, response):
            sel = Selector(response)

            # Extract the article URL and title
            item = CsdnblogItem()
            article_url = str(response.url)
            article_name = sel.xpath('//div[@id="article_details"]/div/h1/span/a/text()').extract()

            item['article_name'] = [n.encode('utf-8') for n in article_name]
            item['article_url'] = article_url.encode('utf-8')

            yield item

            # Extract the URL of the next article
            urls = sel.xpath('//li[@class="next_article"]/a/@href').extract()
            for url in urls:
                print url  # relative link (Python 2 print statement)
                url = "http://blog.csdn.net" + url
                print url  # absolute link
                yield Request(url, callback=self.parse)

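【1】 yield works better than return here, because parse has to emit both the item and the request for the next article.

【2】 The key step is finding the link to the next article.

【3】 The title and URL are encoded as UTF-8 before being stored in the item.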
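Notes 【1】 and 【2】 can be illustrated without Scrapy. The sketch below, written in Python 3, simulates the crawl with a dictionary of fake pages: `parse` is a generator that yields one item per page and then the absolute URL of the next article, and a small loop plays the role of the scheduler. The page data, the `FAKE_SITE` dictionary, and the `crawl` helper are all invented for illustration; `urljoin` is shown as an alternative to the string concatenation used in the spider.

```python
from urllib.parse import urljoin

# Fake site: URL -> (article title, relative href of the next article or None).
# All of this data is made up for illustration.
FAKE_SITE = {
    "http://blog.csdn.net/user/article/details/1": ("First post", "/user/article/details/2"),
    "http://blog.csdn.net/user/article/details/2": ("Second post", "/user/article/details/3"),
    "http://blog.csdn.net/user/article/details/3": ("Third post", None),
}


def parse(url):
    """Yield the item for this page, then the URL of the next article if any."""
    title, next_href = FAKE_SITE[url]
    yield {"article_name": title, "article_url": url}
    if next_href is not None:
        # urljoin resolves the relative href against the current page URL.
        yield urljoin(url, next_href)


def crawl(start_url):
    """Tiny stand-in for the scheduler: follow yielded URLs, collect yielded items."""
    items, pending, seen = [], [start_url], set()
    while pending:
        url = pending.pop(0)
        if url in seen:
            continue
        seen.add(url)
        for result in parse(url):
            if isinstance(result, dict):
                items.append(result)      # an item
            else:
                pending.append(result)    # a follow-up URL
    return items


crawled = crawl("http://blog.csdn.net/user/article/details/1")
for item in crawled:
    print(item["article_name"], item["article_url"])
```

Because `parse` yields instead of returning, a single call can hand back both the item and the follow-up link, which is the same pattern the spider relies on.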

Run the spider:

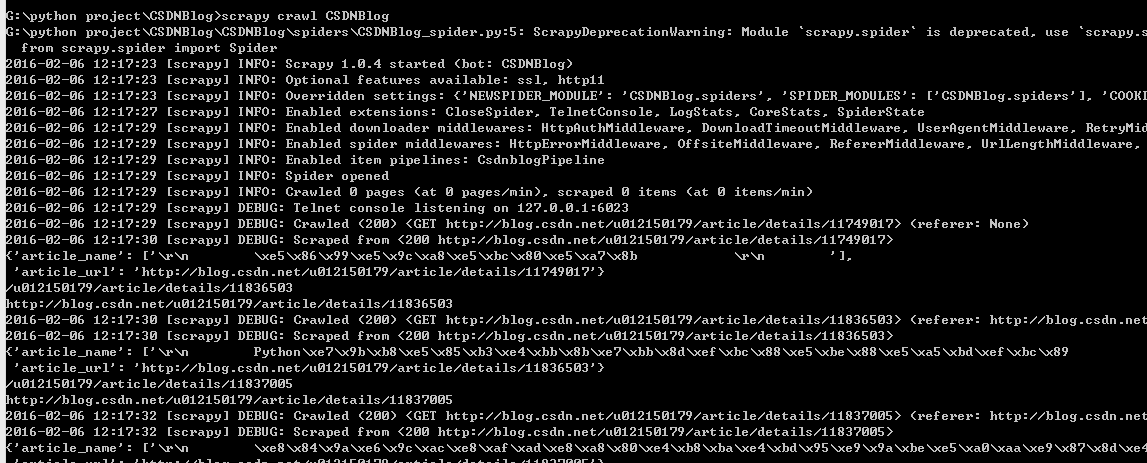

scrapy crawl CSDNBlog

Screenshot of the crawl output:

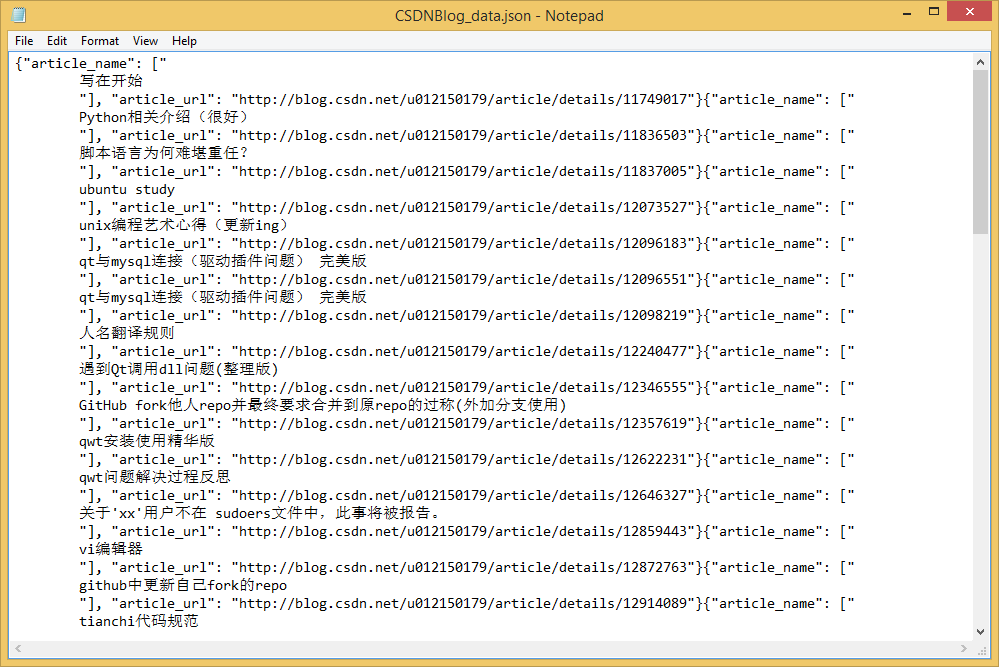

The output file CSDNBlog_data.json (partial screenshot):

【Excerpted】 When reposting, please credit the source: http://blog.csdn.net/u012150179/article/details/34486677